Most people will have heard of artificial intelligence (AI). For some, it might conjure up images of a cute robot in a Hollywood movie; for others, a dystopian world overtaken by machines. It is less likely, however, to be regarded in the context of today’s cyber world.

AI is the engine behind cyber technology: it enables people to have a great online experience by delivering intuitive offerings, automated services and a more personalised engagement with brands and providers. It also powers the security products and services that protect people online, detecting threats in real time and preventing cyberattacks. This may not be as tangible as the little robot on the silver screen, but it’s critically important to our online lives.

Unfortunately, AI’s far-reaching potential isn’t only utilised by the good guys: increasingly, nefarious actors are developing more sophisticated attacks, harnessing AI algorithms and machine learning technology to automate and increase the scope of harmful programs. And while rudimentary AI-like capabilities have been used to commit unlawful activities for decades, increased automation has led to an exponential rise in the volume of attacks. As a result, cybercrime is now a multibillion-dollar business.

Machine power

At Avast, we use AI to protect hundreds of millions of people online through our security solutions. Our wide user base means that our algorithms are constantly fed new threat information. Just as cybercriminals can use AI to teach their malware to fool security systems, our solutions are learning to identify and stop new threats.

In order to be effective, machine learning and deep learning technologies need to harvest huge amounts of data and develop robust pattern-recognition links that make connections to known malware. This in turn enables them to identify or flag suspect and unknown software for further investigation. All of this occurs in a matter of seconds.
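As a rough illustration of what 'making connections to known malware' can mean, the sketch below compares an unknown sample against known malicious samples by the overlap of their byte sequences. It is a deliberately simple stand-in (Jaccard similarity over byte n-grams) rather than a trained model, and it is not a description of Avast's systems: the function names, the n-gram size and the threshold are all assumptions made for this example.

```python
from typing import Iterable, Set


def byte_ngrams(data: bytes, n: int = 4) -> Set[bytes]:
    """Return the set of all n-byte windows found in the sample."""
    return {data[i:i + n] for i in range(len(data) - n + 1)}


def jaccard(a: Set[bytes], b: Set[bytes]) -> float:
    """Share of n-grams the two samples have in common (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def flag_suspect(sample: bytes, known_malware: Iterable[bytes],
                 threshold: float = 0.5) -> bool:
    """Flag the sample if it closely resembles any known malicious sample.

    The threshold is a hypothetical value chosen for illustration only.
    """
    sample_grams = byte_ngrams(sample)
    return any(
        jaccard(sample_grams, byte_ngrams(known)) >= threshold
        for known in known_malware
    )


# Made-up byte strings standing in for real files.
known = [b"evil_payload_decrypt_and_run"]
variant = b"evil_payload_decrypt_and_run!!"   # lightly modified copy
harmless = b"hello world, this is harmless"
```

Here the lightly modified variant would be flagged for further investigation, while the unrelated sample would not. Production systems learn far richer features from far more data, but the principle of measuring resemblance to known threats is the same.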

Speed is one of the key advantages of using machine learning within security processes, as malicious programs spread and evolve extremely quickly. While most threats have a short lifespan, they often attempt to morph into something else in order to avoid automatic detection systems. This means data needs to be processed at great pace to keep up. Put simply, machines can act much faster than the human analysts trying to monitor them, so it’s important to fight fire with fire.

There are limits to AI, of course, and this is why automation is still not perfect: algorithms cannot always determine the nature of a file, for example. In those cases, a human is required to step in and be the detective. At Avast, we quarantine unidentifiable files or links and assign a security researcher to investigate them. Once a decision has been made regarding the software in question, the information is fed into the machine learning program, expanding its knowledge base and adding to its identification capability. It’s worth noting that the same limitations apply to the nefarious actors working hard to improve their code and outwit the ‘good’ AI.
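The quarantine-and-review loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Avast's implementation: the class name, the score thresholds and the idea of a single maliciousness score between 0 and 1 are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class TriageQueue:
    """Toy human-in-the-loop triage.

    Confident scores are decided automatically; uncertain ones are
    quarantined until an analyst gives a verdict.
    """
    block_at: float = 0.9   # hypothetical: score at or above this is blocked
    allow_at: float = 0.1   # hypothetical: score at or below this is allowed
    quarantine: Dict[str, float] = field(default_factory=dict)
    labelled: List[Tuple[str, bool]] = field(default_factory=list)

    def triage(self, sample_id: str, score: float) -> str:
        """Decide automatically when confident, otherwise quarantine."""
        if score >= self.block_at:
            return "block"
        if score <= self.allow_at:
            return "allow"
        self.quarantine[sample_id] = score
        return "quarantine"

    def analyst_verdict(self, sample_id: str, is_malicious: bool) -> None:
        """Record the researcher's decision as a new labelled example."""
        self.quarantine.pop(sample_id)
        self.labelled.append((sample_id, is_malicious))


queue = TriageQueue()
first = queue.triage("sample-a", 0.95)    # confident: blocked outright
second = queue.triage("sample-b", 0.50)   # uncertain: held for review
queue.analyst_verdict("sample-b", True)   # researcher decides it is malicious
```

The entries collected in `labelled` are what would be fed back into the model at the next training run, which is how each human decision adds to the system's identification capability.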

Spear phishing – the request for confidential information via a fraudulent email that claims to represent a trusted entity – is a very real example of a long-established threat that has been improved by the use of AI technology. Here, AI can adapt and personalise the copy of each email, enabling cybercriminals to reach a much wider audience in a shorter time frame and increasing the likelihood of convincing unwitting victims to respond.

Cybercriminals are also using machine learning technologies to try to fool detection or antivirus systems. This is a game of cat and mouse in which cybercriminals are always trying to get ahead of cybersecurity experts, and cybersecurity experts are trying to detect patterns that may prevent future attacks from happening.

Connected chaos

Botnets – such as the widely publicised Mirai botnet that took down DNS provider Dyn in 2016 – also leverage AI to create stealthier ways of communicating with command and control servers. Unfortunately, most antivirus programs will not spot botnets, as their activity often appears to be innocent internet traffic on the system. We recently discovered a new botnet called Torii, which specifically targets Internet of Things (IoT) devices.

The rapid uptake of IoT devices in the home and office has significantly expanded the cyberthreat landscape, putting people at greater risk on a personal level. As these devices make our lives more convenient, they also present new opportunities for cybercriminals to attack.

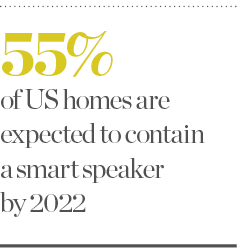

IoT devices, such as Google Home or Amazon Echo, are growing in popularity: Juniper Research predicts that these smart speakers will be in 55 percent of US homes by 2022. Whether people are using them to wake up for work, play music or buy items online, smart speakers will, over time, gather and hold valuable personal data about the habits of the household. This makes them a very attractive target for cybercriminals interested in stealing money or personal information.

Cheaper smart speakers are of most concern to cybersecurity experts. While budget-friendly alternatives are designed to be as convenient as possible, they are generally not built with security in mind, so their default settings are weak. One of the first things a user will do is link various accounts to their new smart speaker using the device’s default settings.

These seemingly innocent actions can have major consequences: without secure logins, which would require the device to verify that each command is being made by the owner, a smart speaker will read emails aloud or place orders regardless of who is asking it to. This means that family members – or anyone visiting a home containing a smart speaker, welcome or not – can draw personal information from the device.

Cybercriminals don’t necessarily need to be within range of a smart speaker to make it act on their behalf, nor do they have to hack the device itself. Instead, attackers can break into a network through a vulnerable router and hack other IoT devices connected to it from there. Hacking a vulnerable smart device that can play recordings enables cyberattackers to talk to a smart speaker – and if it has been set up without effective security settings, it will do whatever anyone tells it to.

This could even allow a criminal to physically enter the home: if a smart door lock is connected to a smart speaker, for example, a burglar could either command the smart speaker through a window to open the lock, or hack into the home network and have another device command the smart speaker to unlock the front door. Last year, an exploit called BlueBorne allowed anyone within range of a Bluetooth-enabled device to take control of said device, providing they had the right tools – tools that were readily available to the public.

The great unknown

There is also concern about vulnerabilities we haven’t found. Weaknesses often come to light years after the fact, meaning IoT devices could already contain vulnerabilities – we just don’t know it yet. EternalBlue – another exploit – is the perfect example: the flaw it targets had existed in Windows software as far back as Windows XP, but it only became widely known in 2017, when it allowed attackers to carry out WannaCry, the largest ransomware attack in history.

The more we live out our days online and surround ourselves with IoT devices like smart speakers, the more motivation criminals will have to target us in the cyber world. Fortunately, feeding real-time threat data gathered from a global user base of millions into our AI machine helps us to protect people from threats that are not yet known. It’s the powerful combination of man and machine that allows us to effectively tackle cybercrime.